As I outlined in the last entry, sometimes the facts take you to a place you don’t want to go. Obviously the first thing to do is make sure you have your facts in order. But if the facts are truly “facts” rather than assertions, assumptions, or faulty conclusions, then you have a problem, and a decision to make. Do you accept that you now have to change your position, or do you decide to ignore the facts?

Interestingly, it isn’t necessarily a clear choice. Here are a couple of examples.

Vaccines and autism.

Jenny McCarthy (among many others) believes that there is a link between vaccines (or more specifically, thimerosal, the preservative used for decades in vaccines) and autism; the contention is that the significant upswing in the number of autistic children today is caused (or at least worsened) by the vaccines given to kids. A study published in 1998 in a respected medical journal by a Dr. Andrew Wakefield apparently established this cause-and-effect relationship. So today many people have begun refusing to have their kids vaccinated for fear that they will develop autism as a result of exposure to thimerosal. These vaccines, by the way, are the reason that polio, whooping cough, chicken pox and other diseases that killed hundreds of thousands of kids in our past are virtually nonexistent today. Or would be, except for the choice by a significant number of parents not to vaccinate their kids, which is making it possible that some of these terrible diseases may resurface.

But here’s the thing: Wakefield’s study could not be replicated, even by him. His work was carefully reviewed by the journal that published his paper, which determined that he falsified the data. The study was withdrawn, he was exposed as a fraud and his license to practice medicine was revoked. Additional studies have shown no connection at all between exposure to thimerosal and autism; other models for the cause of autism are emerging. Yet the belief persists that vaccines cause autism. And many of the practitioners in my industry are strongly supportive of the anti-vaccination position. (Aside: my company takes no official position on the vaccination issue, but when the question comes up in my workshops I lay out the facts as we have them and let the audience decide.)

GMO (genetically modified organisms) in our food supply.

Scientists have figured out how to take genes from one organism and splice them into another. One of the first commercialized and perhaps best-known examples of this involves an herbicide called Roundup (glyphosate). A gene-spliced corn called “Roundup Ready” has been developed that is not affected by glyphosate, so it will grow in the presence of the herbicide. Whether glyphosate is as harmful as it is purported to be is not our topic here; the gene-spliced corn is. Lots of people in my area of business are concerned that the process of gene-splicing may have unintended (meaning: bad) consequences somewhere down the road.

Another modified food: there is a fish that resists freezing even when the water around it freezes, because of a specific gene found in its DNA. Scientists have taken that gene and spliced it into strawberries, making the strawberries freeze-resistant. The non-GMO crowd (again, largely in my area of business) is very concerned about foods like this, calling them “Frankenfoods” and trying to get them banned. Most farmers oppose these bans, and even oppose labeling the foods as GMO, for obvious economic reasons: they can get higher crop yields using GMO plants. (The irony here is that virtually all the food we eat has been genetically modified; it’s just been done over many years by grafting or selective breeding, rather than in a laboratory.)

But the science is fairly clear that GMO foods are safe. Study after study has shown no detectable difference in the food quality, nutrient content, use in the human body, or any other known variable in GMO foods when compared to non-GMO foods. I suppose it would be more accurate to say “there has never been any indication that GMO foods are any different from non-GMO foods in how they are metabolized,” since it’s a fairly new area of research. But the anti-GMO position then takes advantage of the fact that it is logically impossible to prove GMO foods could never cause a problem. That point is technically true, but it sets a standard of proof that can never be met.

So we have two situations where the facts seem clear: there is no evidence that autism is linked to vaccinations, or that genetically-modified foods are harmful. But my particular branch of health care insists on believing the opposite in spite of the lack of evidence.

I must also point out that, while there may not be any scientific support for avoiding GMO ingredients, the perception on the part of the marketplace that GMO ingredients are bad may still drive the decision to use all non-GMO ingredients. In point of fact that is exactly what my company is doing.

But it is a decision based on market demand and not scientific facts.

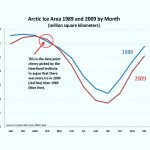

Even a casual look shows that there was less ice in 2009 than there was just 20 years earlier. But for one (very) brief moment (in March, where the arrow is pointing), the lines crossed, and the ice pack appeared to be ever-so-slightly greater in 2009 than in 1989.

(1642-1726 or 7), and followed in Newton’s footsteps. Recall that Newton is widely considered one of the greatest scientists (if not THE greatest) who ever lived, leading the Scientific

first century C.E. and developed a model to describe the workings of the universe that lasted for nearly a millennium and a half. By all accounts Ptolemy was a smart guy; he wrote important treatises in mathematics and geography in addition to astronomy. He just got it exactly wrong when it came to astronomy: he said that the earth was at the center of the universe, and everything revolved around us. Given our personal experience, it seems a reasonable conclusion: the earth doesn’t feel to us like it’s moving, and we can clearly see the objects in the night sky slowly move from one horizon to the other each night. As we all know, however, that’s not what happens. But it took a long time and no little pain (both mental and physical) to change that, which gives us some idea of how much people respected Ptolemy’s work.

Around the 1500s, our aforementioned Nicolaus Copernicus (1473-1543) made the observation that the earth does not in fact sit still; it revolves around the sun once a year. He got much of the rest wrong; he thought that the sun, not the earth, was the center of the universe, and that all the stars spin around the sun. (Nerdly aside: the notion that the earth is the center of the universe is called “geocentrism”; the belief that the sun is at the center of the universe is called “heliocentrism.”) Given that lens-grinding was still in its infancy and the first telescopes were just beginning to be developed, I think Copernicus can be forgiven for his mistakes, especially since it had been “common understanding” for 15 centuries that Ptolemy had it right. Over the next couple of hundred years more and more very smart people started looking differently at the stars, and the picture we know to be true today gradually emerged. But as I said, it didn’t happen overnight. In fact, there was considerable resistance to this novel concept, and not just from the Ptolemy-loyalists among the other night-sky observers.

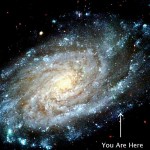

particularly The Church) Galileo Galilei (1564-1642) was born. Galileo was strongly influenced by Copernicus, and in fact expanded significantly on Copernicus’ observations about heliocentrism, but in so doing he ran afoul of The Church.

We’re on the fringes of our galaxy, the Milky Way (a spiral-shaped galaxy of no particular note other than the obvious fact that it’s where we live). When we look up at the night sky, like I did as a kid in Illinois, the swath of lighter sky is the center of our galaxy; we’re looking back toward it from where we are in one of the outer arms. Our Milky Way is somewhere between 100,000 and 180,000 light-years across. Again, that means that light from a star on the other side of our galaxy left there up to 180,000 years ago and is just reaching us now.

Take a giant step backward: now the scale of distance becomes nearly impossible to grasp. I read an article in the news a couple of weeks ago indicating that astronomers have now come to understand that our galaxy and associated Local Group structures are part of a “supercluster” of galaxies that’s been named Laniakea. Our Milky Way is located in a remote arm of that. (See the small red dot in the right-center of the artist’s representation of Laniakea to the right? That’s covering the Milky Way.) This supercluster is about 50 times bigger than our Local Group.

We’re watching a political party implode.

The last debate would have been hilarious, if it weren’t part of the process of selecting a candidate for the most powerful job in the world. This person will literally have the capacity to destroy civilization and send the few human survivors back to the stone age, and they’re calling each other names and comparing dick size.

In the run-up to the actual primary process, the conventional wisdom was that it would be Jeb Bush vs Hillary Clinton. On the Republican side, Ted Cruz, Marco Rubio and a whole bunch of others were expected to make it interesting, and for the Democrats it was always Hillary’s to lose, but at the end of the day it was widely expected to be a contest between the two party Brahmins. As it turned out, Bernie Sanders has made a surprisingly strong showing against Clinton, while virtually everyone on the Republican side has been stunned by Trump’s dominance. He was expected to self-immolate very early on, but the more outrageous and hate-filled the things that come out of his mouth, the better his followers like it. He’s even said they are so loyal he could shoot someone in the middle of Fifth Avenue and they’d still vote for him. I don’t know which is scarier: the disregard he has for his own followers’ critical thinking skills, or the fact that he is probably correct.

Anyhow, it was Jeb who never got off the ground, and it currently looks like the Republican nomination is Trump’s to lose. So the leaders of the Republican Party are throwing everything they have at Trump to try to prevent that from happening. They see that he represents the loss of control of the party, and that terrifies them.

Of course the proximal cause is Trump’s ascendancy and apparently clear path to the Republican nomination, but as I implied, that’s just the most visible one. I think this started after the shellacking the party took with Barry Goldwater in the presidential election of 1964, when the Republican party wonks realized that they had to expand their constituency to have a hope of winning (let alone keeping) the White House and Congress in the future. They did the simple math that revealed that there were more Democrats than Republicans, and that this was only going to become more pronounced as the demographics of the country shifted. They were going to have to somehow expand their appeal beyond their base of white (predominantly male), wealthy upper and middle class voters in order to keep from being forever marginalized.

So they sold their souls to the Devil.

Or more accurately, to the Fundamentalist Christians. To appeal to them, they decided to emphasize what their focus groups told them were hot buttons for this group, so they positioned themselves as the party that would protect people from the Godless Communist hordes (strong on national defense), the criminal drug-users (strong on crime) and the destruction of the American family (anti-abortion, pro-traditional family with Dad as the breadwinner head of the house and Mom in a stay-at-home supportive role). With the exception of that last, it wasn’t much of a stretch from earlier positions; and as I think about it even the pro-traditional family stance was really just finding a parade to march in front of. After all, who would be opposed to a strong family?

But in order to make that work, they had to make it appear that they were most closely aligned with that demographic; no little feat when their party was predominantly rich, white and male, and their target was working class, less educated, and both men and women. The only commonality up until then was race. So they needed to stir up fear and create a siege mentality by saying that family values and Christianity were (and are) under attack. From whom or what is never clearly stated, except a vague “elitist secular agenda,” whatever that means.

We are constantly reminded that “This country was founded on Christianity and the Bible!” and of course this is exactly wrong; the Founding Fathers may have incorporated Christian principles into the Bill of Rights, but they specifically and painstakingly avoided an official religion, be it one of the many branches of Christianity or any other, monotheistic or polytheistic. The Treaty of Tripoli (penned in 1796, ratified by the Senate and signed by President John Adams) goes so far as to explicitly state that “the Government of the United States of America is not, in any sense, founded on the Christian religion” (Article 11). Not sure how it could have been stated more plainly.

The irony is of course lost on the Wing Nuts out there when they trumpet our “Christian Constitutional Foundation.”

Anyhow, back to our Republican decision 50-odd years ago and the implosion of today. So they stirred up the evangelicals, got them to vote reliably Republican with nonsense about an attack on Christian Values from some unnamed “elitist” group and used their votes to stay in power. We’ve had Reagan, two Bushes with a Gingrich-led rebellion in between, and now Ted Cruz. Today a person running on the Republican ticket has no hope unless they declare themselves unequivocally anti-abortion, pro-gun and pro Christ Jesus.

The problem is that the Republican wonks post-Goldwater never really wanted the party to become so socially conservative. True, most Americans (myself among them) are not pro-abortion. I think life is precious and should be treated as such. But I’m MUCH more opposed to being told by a politician when life begins, or what my wife and I can or cannot do in what is at its core a profoundly personal decision. Those guys 50-plus years ago wanted the votes that would allow them to get elected, but wanted to keep the party the way it was: fiscally conservative, favoring (and run by) wealthy, nearly all white, old men.

They are horrified by Trump and the fact that he’s taken over the party. And Ted Cruz would be just as bad, from their perspective; he’s known for his refusal to compromise on anything, even within his own party. Lindsey Graham said that the Trump/Cruz choice is like deciding whether you’d rather be shot or poisoned. That’s why we saw Mitt Romney being trotted out to bash Trump. Not because they want Cruz to win, but because almost anyone would be better than Trump.

Except, of course, Hillary. Or Bernie. Either way, it’s a disaster for the Republican Establishment. But they brought it on themselves.

Be careful what you wish for.